Computing perievent#

This tutorial demonstrates how we use Pynapple on various publicly available datasets in systems neuroscience to streamline analysis. In this tutorial, we will examine the dataset from Zheng et al (2022), which was used to generate Figure 4c in the publication.

The NWB file for the example used here is provided in this repository. The entire dataset can be downloaded here.

import matplotlib.pyplot as plt

import numpy as np

import pynapple as nap

import seaborn as sns

Stream the data from DANDI#

from pynwb import NWBHDF5IO

from dandi.dandiapi import DandiAPIClient

import fsspec

from fsspec.implementations.cached import CachingFileSystem

import h5py

# Enter the session ID and path to the file

dandiset_id, filepath = ("000207", "sub-4/sub-4_ses-4_ecephys.nwb")

with DandiAPIClient() as client:

asset = client.get_dandiset(dandiset_id, "draft").get_asset_by_path(filepath)

s3_url = asset.get_content_url(follow_redirects=1, strip_query=True)

# first, create a virtual filesystem based on the http protocol

fs = fsspec.filesystem("http")

# create a cache to save downloaded data to disk (optional)

fs = CachingFileSystem(

fs=fs,

cache_storage="nwb-cache", # Local folder for the cache

)

# next, open the file

file = h5py.File(fs.open(s3_url, "rb"))

io = NWBHDF5IO(file=file, load_namespaces=True)

/home/runner/.local/lib/python3.12/site-packages/hdmf/spec/namespace.py:583: UserWarning: Ignoring the following cached namespace(s) because another version is already loaded:

core - cached version: 2.5.0, loaded version: 2.8.0

The loaded extension(s) may not be compatible with the cached extension(s) in the file. Please check the extension documentation and ignore this warning if these versions are compatible.

self.warn_for_ignored_namespaces(ignored_namespaces)

Parsing the data#

The first step is to load the data from the Neurodata Without Borders (NWB) file. This is done as follows:

custom_params = {"axes.spines.right": False, "axes.spines.top": False}

sns.set_theme(style="ticks", palette="colorblind", font_scale=1.5, rc=custom_params)

data = nap.NWBFile(io.read()) # Load the NWB file for this dataset

# What does this look like?

print(data)

4

┍━━━━━━━━━━━━━━━━━━━━━━━━━━┯━━━━━━━━━━━━━┑

│ Keys │ Type │

┝━━━━━━━━━━━━━━━━━━━━━━━━━━┿━━━━━━━━━━━━━┥

│ units │ TsGroup │

│ timediscrimination_table │ IntervalSet │

│ recognition_table │ IntervalSet │

│ encoding_table │ IntervalSet │

│ experiment_ids │ Tsd │

│ events │ Tsd │

┕━━━━━━━━━━━━━━━━━━━━━━━━━━┷━━━━━━━━━━━━━┙

Get spike timings

spikes = data["units"]

What does this look like?

print(spikes)

Index rate x y z imp location filtering ...

------- ------- ----- ------ ------ ----- ----------------- ----------- -----

0 7.0009 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

1 7.24478 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

2 6.09486 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

3 6.91548 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

4 0.40162 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

5 0.46117 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

6 1.81066 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

... ... ... ... ... ... ... ... ...

28 1.13432 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

29 0.60013 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

30 0.18433 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

31 2.3282 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

32 0.31052 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

33 0.69087 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

34 0.65578 26.63 -15.83 -16.49 nan hippocampus_right 300-3000Hz ...

This TsGroup has, among other information, the mean firing rate of the unit, the X, Y and Z coordinates, the brain region the unit was recorded from, and the channel number on which the unit was located.

Next, let’s get the encoding table of all stimulus times, as shown below:

encoding_table = data["encoding_table"]

# What does this look like?

print(encoding_table)

index start end fixcross_time ExperimentID boundary1_time boundary2_time boundary3_time stimCategory ...

0 1.06846275 9.10133 0.06 70.0 5.07 nan nan 0 ...

1 10.18234225 18.1145585 9.12 70.0 12.88 14.43 nan 1 ...

2 19.071072 27.13738775 18.13 70.0 23.07 nan nan 2 ...

3 28.1604755 36.390234 27.15 70.0 32.16 nan nan 0 ...

4 37.470909 44.5844975 36.41 70.0 41.47 nan nan 0 ...

5 45.5747065 53.5579785 44.6 70.0 49.57 nan nan 0 ...

6 54.58672125 62.78414525 53.58 70.0 56.07 nan nan 1 ...

... ... ... ... ... ... ... ... ... ...

83 868.08706525 876.1916395 867.0 70.0 868.64 869.92 871.67 1 ...

84 883.93252325 891.72896825 882.97 70.0 887.93 886.18 890.21 2 ...

85 892.75523325 900.92109225 891.75 70.0 898.28 nan nan 1 ...

86 901.9068505 910.17197875 900.94 70.0 905.91 nan nan 2 ...

87 911.1383965 919.33594975 910.19 70.0 915.14 912.7 917.07 2 ...

88 920.36395025 928.32984475 919.35 70.0 921.91 923.48 924.92 1 ...

89 930.6396355 939.07302925 929.72 70.0 933.0 934.19 nan 1 ...

shape: (90, 2), time unit: sec.

This table has, among other things, the scene boundary times for which we will plot the peri-event time histogram (PETH).

There are 3 types of scene boundaries in this data. For the purposes of demonstration, we will use only the “No boundary” (NB) and the “Hard boundary” (HB conditions). The encoding table has a stimCategory field, which tells us the type of boundary corresponding to a given trial.

stimCategory = np.array(

encoding_table.stimCategory

) # Get the scene boundary type for all trials

# What does this look like?

print(stimCategory)

[0 1 2 0 0 0 1 0 2 1 2 0 2 0 1 1 1 1 0 0 2 0 0 2 0 1 0 2 0 0 2 0 0 0 0 2 2

2 0 0 1 1 1 1 0 2 1 1 0 2 1 0 2 2 2 0 1 0 1 1 2 2 0 2 2 2 1 1 2 1 0 2 2 1

0 1 2 0 2 2 1 1 1 1 2 1 2 2 1 1]

Trials marked 0 correspond to NB, while trials marked 2 correspond to HB. Let’s extract the trial numbers for NB and HB trials, as shown below:

indxNB = np.where(stimCategory == 0) # NB trial indices

indxHB = np.where(stimCategory == 2) # HB trial indices

The encoding table also has 3 types of boundary times. For the purposes of our demonstration, we will focus on boundary1 times, and extract them as shown below:

boundary1_time = np.array(encoding_table.boundary1_time) # Get timings of Boundary1

# What does this look like?

print(boundary1_time)

[ 5.06846275 12.88060075 23.071072 32.1604755 41.470909

49.5747065 56.07442325 72.803867 82.1299925 92.77667275

99.9925845 109.0787315 118.0778575 133.12435825 140.94827125

147.8236585 160.726736 170.441769 183.29262575 191.11327175

199.63382425 208.5142425 217.6186295 232.62687825 241.7270365

250.58327775 259.5545145 268.6661045 278.026902 287.04517875

301.2426685 310.21093375 319.1822215 328.13037175 337.16751875

363.737004 372.785663 381.86749625 391.2704085 400.5077995

407.28660475 416.0272855 431.792285 441.75272875 448.88401175

456.97766275 463.88864475 472.13035175 490.53584775 499.7064625

507.6917495 518.78793775 528.140675 537.1412335 552.5116415

562.4287995 569.44012625 580.99649325 591.974217 597.74463025

608.3638655 624.44389125 633.4175285 642.3969695 651.776966

660.699774 676.9439885 681.3602465 692.799878 699.53861175

711.341688 720.4819275 729.43782175 772.99961425 780.19490625

788.1175045 798.34264525 807.54519 817.25781375 835.1736475

842.54760125 852.294991 861.40924725 868.63873425 887.93252325

898.284193 905.9068505 915.1383965 921.90619325 932.99710575]

This contains the timings of all boundaries in this block of trials. Note that we also have the type of boundary for each trial. Let’s store the NB and HB boundary timings in separate variables, as Pynapple Ts objects:

NB = nap.Ts(boundary1_time[indxNB]) # NB timings

HB = nap.Ts(boundary1_time[indxHB]) # HB timings

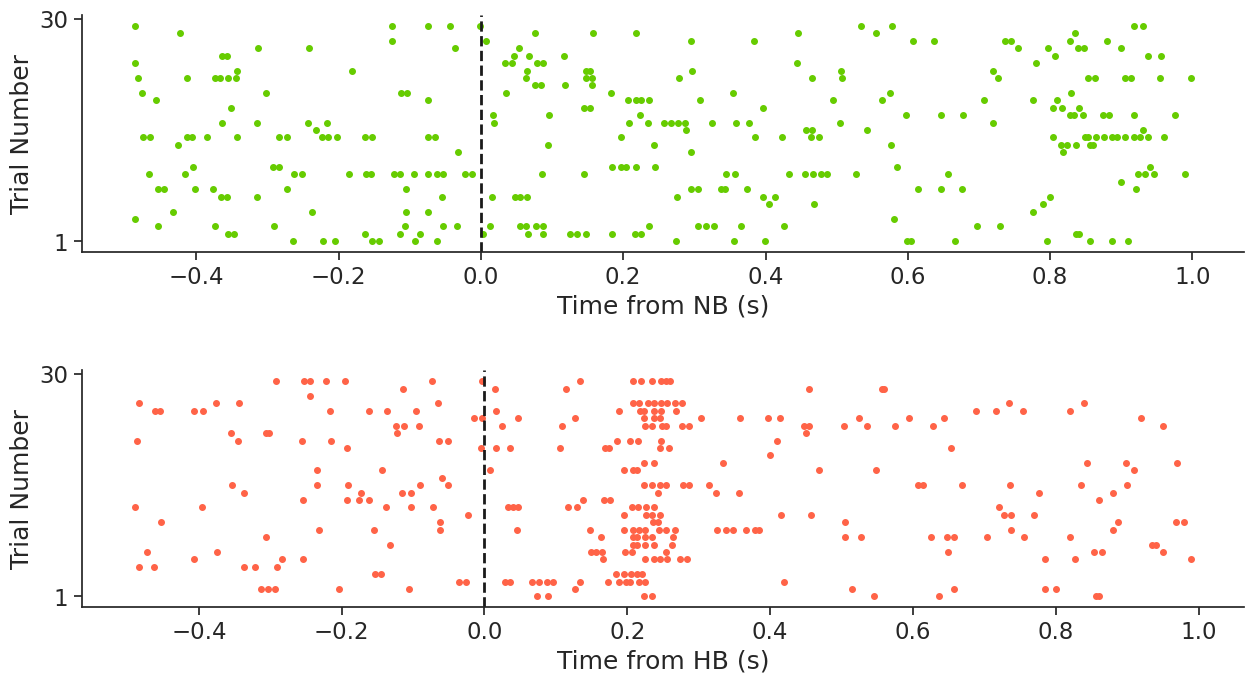

Peri-Event Time Histogram (PETH)#

A PETH is a plot where we align a variable of interest (for example, spikes) to an external event (in this case, to boundary times). This visualization helps us infer relationships between the two.

For our demonstration, we will align the spikes of the first unit, which is located in the hippocampus, to the times of NB and HB. You can do a quick check to verify that the first unit is indeed located in the hippocampus, we leave it to you.

With Pynapple, PETHs can be computed with a single line of code!

NB_peth = nap.compute_perievent(

spikes[0], NB, minmax=(-0.5, 1)

) # Compute PETH of unit aligned to NB, for -0.5 to 1s windows

HB_peth = nap.compute_perievent(

spikes[0], HB, minmax=(-0.5, 1)

) # Compute PETH of unit aligned to HB, for -0.5 to 1s windows

Let’s plot the PETH

plt.figure(figsize =(15,8))

plt.subplot(211) # Plot the figures in 2 rows

plt.plot(NB_peth.to_tsd(), "o",

color=[102 / 255, 204 / 255, 0 / 255],

markersize=4)

plt.axvline(0, linewidth=2, color="k", linestyle="--") # Plot a line at t = 0

plt.yticks([0, 30]) # Set ticks on Y-axis

plt.gca().set_yticklabels(["1", "30"]) # Label the ticks

plt.xlabel("Time from NB (s)") # Time from boundary in seconds, on X-axis

plt.ylabel("Trial Number") # Trial number on Y-axis

plt.subplot(212)

plt.plot(HB_peth.to_tsd(), "o",

color=[255 / 255, 99 / 255, 71 / 255],

markersize=4) # Plot PETH

plt.axvline(0, linewidth=2, color="k", linestyle="--") # Plot a line at t = 0

plt.yticks([0, 30]) # Set ticks on Y-axis

plt.gca().set_yticklabels(["1", "30"]) # Label the ticks

plt.xlabel("Time from HB (s)") # Time from boundary in seconds, on X-axis

plt.ylabel("Trial Number") # Trial number on Y-axis

plt.subplots_adjust(wspace=0.2, hspace=0.5, top=0.85)

Awesome! From the PETH, we can see that this neuron fires after boundary onset in HB trials. This is an example of what the authors describe here as a boundary cell.

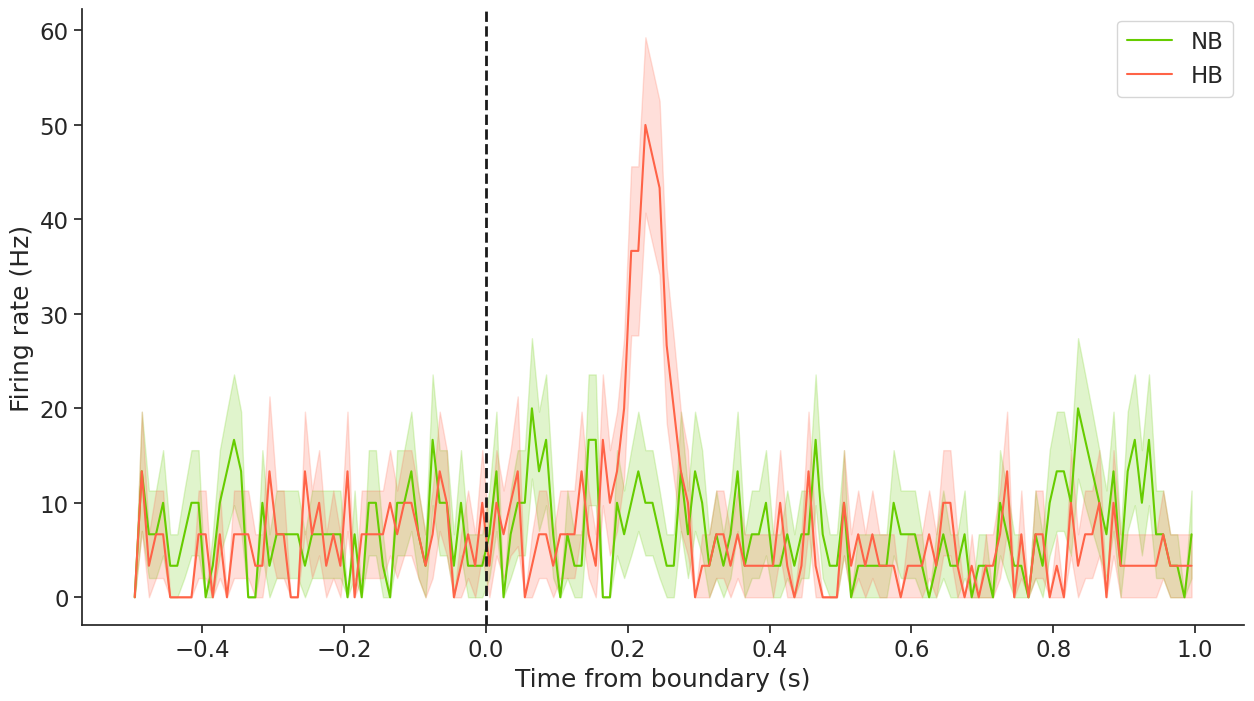

PETH of firing rate for NB and HB cells#

Now that we have the PETH of spiking, we can go one step further. We will plot the mean firing rate of this cell aligned to the boundary for each trial type. Doing this in Pynapple is very simple!

Use Pynapple to compute binned spike counts

bin_size = 0.01

counts_NB = NB_peth.count(bin_size) # Spike counts binned in 10ms steps, for NB trials

counts_HB = HB_peth.count(bin_size) # Spike counts binned in 10ms steps, for HB trials

Compute firing rate for both trial types

fr_NB = counts_NB / bin_size

fr_HB = counts_HB / bin_size

Smooth the firing rate with a gaussian window with std=4*bin_size

counts_NB = counts_NB.smooth(bin_size*4)

counts_HB = counts_HB.smooth(bin_size*4)

Compute the mean firing rate for both trial types

meanfr_NB = fr_NB.mean(axis=1)

meanfr_HB = fr_HB.mean(axis=1)

Compute standard error of mean (SEM) of the firing rate for both trial types

from scipy.stats import sem

error_NB = sem(fr_NB, axis=1)

error_HB = sem(fr_HB, axis=1)

Plot the mean +/- SEM of firing rate for both trial types

plt.figure(figsize =(15,8))

plt.plot(

meanfr_NB, color=[102 / 255, 204 / 255, 0 / 255], label="NB"

) # Plot mean firing rate for NB trials

# Plot SEM for NB trials

plt.fill_between(

meanfr_NB.index.values,

meanfr_NB.values - error_NB,

meanfr_NB.values + error_NB,

color=[102 / 255, 204 / 255, 0 / 255],

alpha=0.2,

)

plt.plot(

meanfr_HB, color=[255 / 255, 99 / 255, 71 / 255], label="HB"

) # Plot mean firing rate for HB trials

# Plot SEM for NB trials

plt.fill_between(

meanfr_HB.index.values,

meanfr_HB.values - error_HB,

meanfr_HB.values + error_HB,

color=[255 / 255, 99 / 255, 71 / 255],

alpha=0.2,

)

plt.axvline(0, linewidth=2, color="k", linestyle="--") # Plot a line at t = 0

plt.xlabel("Time from boundary (s)") # Time from boundary in seconds, on X-axis

plt.ylabel("Firing rate (Hz)") # Firing rate in Hz on Y-axis

plt.legend(loc="upper right")

<matplotlib.legend.Legend at 0x7f26b8303b60>

This plot verifies what we visualized in the PETH rasters above, that this cell responds to a hard boundary. Hence, it is a boundary cell. To learn more about these cells, please check out the original study here.

I hope this tutorial was helpful. If you have any questions, comments or suggestions, please feel free to reach out to the Pynapple Team!

Authors

Dhruv Mehrotra

Guillaume Viejo